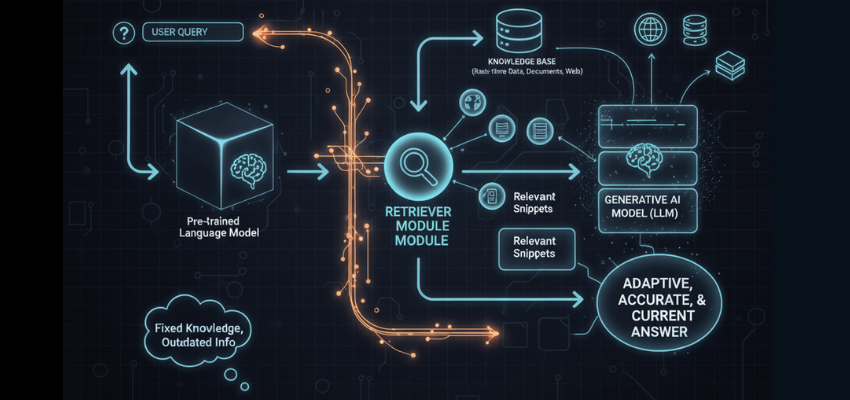

Artificial intelligence has evolved rapidly, but generative models still struggle to stay current. They learn from a fixed training dataset, so their knowledge is frozen once training ends. Retrieval-Augmented Generation (RAG) addresses this limitation by connecting large language models (LLMs) to external, authoritative data sources at query time. This framework bridges the gap between stored knowledge and real-time adaptability, turning generative AI from a static knowledge base into a more dynamic, adaptive, and factually grounded system.

Here, we explore how RAG works and the tangible benefits it brings to AI-driven applications.

What is RAG in Generative AI?

RAG in Generative AI integrates two powerful components:

- Retrieval: Fetches up-to-date, domain-specific data from external databases, APIs, or document sources.

- Generation: Uses retrieved information to produce accurate, context-aware responses.

This integration lets AI systems retrieve current information before responding, unlike traditional models that rely solely on their training data. The result? Smarter, more contextually relevant, and more adaptive AI interactions.
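To make the two components concrete, here is a minimal Python sketch of the retrieve-then-generate flow. It assumes a tiny in-memory document list and uses simple word overlap in place of the embedding search a production system would use. `generate` is a hypothetical placeholder marking where a real LLM call would go.

```python
import re

# Hypothetical knowledge base standing in for an external document store.
DOCUMENTS = [
    "Returns are accepted within 30 days of delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: rank documents by word overlap with the query and keep the top k."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]  # ignore documents with no shared words
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved passages ahead of the user's question."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation placeholder: a real system would send `prompt` to an LLM here."""
    return f"[LLM answer based on a {len(prompt)}-character prompt]"


question = "How many days does shipping take?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)
print(generate(prompt))
```

The model never needs to have seen the shipping policy during training; it only needs the passages placed in its prompt.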

For example, in an e-commerce environment, a static chatbot might not recognize a new product added last week. A RAG-powered system, however, retrieves live product catalog updates, customer reviews, and inventory details to deliver more relevant responses.
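The catalog scenario can be sketched as follows. The `KnowledgeBase` class and the product names are hypothetical, and word-overlap matching stands in for a real search index. The point is that making a new product retrievable is an ordinary write to the data store, with no change to the model.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KnowledgeBase:
    """Hypothetical in-memory store; production systems would use a search index or vector DB."""

    docs: list[str] = field(default_factory=list)

    def add(self, doc: str) -> None:
        # New content is searchable as soon as it is stored; the LLM is never retrained.
        self.docs.append(doc)

    def search(self, query: str) -> list[str]:
        # Return documents sharing at least one word with the query, best match first.
        q = tokenize(query)
        scored = [(len(q & tokenize(d)), d) for d in self.docs]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [d for score, d in scored if score > 0]


kb = KnowledgeBase(["TrailMax hiking boots with a waterproof leather upper."])
print(kb.search("AeroRun sneakers"))  # prints []: the new product is not indexed yet
kb.add("AeroRun sneakers: lightweight mesh running shoe, added last week.")
print(kb.search("AeroRun sneakers"))  # now returns the AeroRun entry
```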

Why Static Models Fall Short

Static generative models face several limitations:

- Outdated Knowledge: They can’t access new data post-training.

- Reduced Trust: Users lose confidence when AI outputs are inaccurate or outdated.

- Limited Context: Responses are based on fixed patterns, not evolving information.

RAG addresses these problems by letting generative models “look up” current information at query time, turning passive knowledge into actionable intelligence.

How RAG in Generative AI Enhances Adaptability

Here’s how Retrieval-Augmented Generation transforms static systems into adaptive intelligence:

- Real-Time Data Access: Integrates with APIs or live databases for updated context.

- Domain-Specific Knowledge: Customizes responses based on brand or product data.

- Higher Accuracy: Reduces hallucinations by referencing factual information.

- Enhanced Personalization: Adjusts answers according to user profiles and behaviors.

- Continuous Improvement: Stays current as new data is added to the knowledge base, without retraining the model.
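One common way RAG supports the accuracy point above is to number the retrieved passages, ask the model to cite them, and check the citations afterwards. The sketch below assumes the passages have already been retrieved; the prompt wording, the `[n]` citation format, and the sample policies are illustrative assumptions rather than a standard.

```python
import re


def grounded_prompt(question: str, sources: list[dict[str, str]]) -> str:
    """Number each retrieved source and tell the model to cite them or admit it doesn't know."""
    numbered = [f"[{i}] ({s['title']}) {s['text']}" for i, s in enumerate(sources, start=1)]
    return (
        "Answer using only the numbered sources below and cite them as [n]. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )


def invalid_citations(answer: str, num_sources: int) -> list[int]:
    """Return cited numbers that do not correspond to any provided source."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return sorted(n for n in cited if not 1 <= n <= num_sources)


# Hypothetical retrieved passages.
sources = [
    {"title": "Warranty policy", "text": "All footwear carries a 6-month warranty."},
    {"title": "Returns policy", "text": "Unworn items can be returned within 30 days."},
]
print(grounded_prompt("What is the warranty on shoes?", sources))

# Checking a made-up model answer: [3] points at a source that was never supplied.
print(invalid_citations("Shoes carry a 6-month warranty [1][3].", len(sources)))  # [3]
```

A non-empty result from `invalid_citations` is a cheap signal that the answer may not be grounded in the retrieved data and deserves a retry or review.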

Use Case:

Imagine an online retail assistant powered by RAG in Generative AI. When a customer asks about “the best running shoes under ₹5,000,” the system retrieves current product listings, customer reviews, and stock availability, then crafts a personalized response. This makes interactions faster, more relevant, and more trustworthy.
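A retrieval step for this query might combine structured filters (category, budget, stock) with ranking before anything reaches the LLM. In this sketch the catalog entries are hypothetical, and it assumes an upstream step has already extracted “running shoes” and the ₹5,000 limit from the customer's message. Ranking by average rating is one illustrative choice.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    category: str
    price_inr: int
    in_stock: bool
    avg_rating: float


# Hypothetical catalog snapshot; a live system would query the product database per request.
CATALOG = [
    Listing("Stride Lite", "running shoes", 3499, True, 4.2),
    Listing("Pace Pro", "running shoes", 6999, True, 4.7),
    Listing("Dash Flex", "running shoes", 4599, False, 4.5),
    Listing("Tempo Go", "running shoes", 4299, True, 4.4),
    Listing("Court Ace", "tennis shoes", 2999, True, 4.6),
]


def retrieve_listings(category: str, max_price: int, k: int = 3) -> list[Listing]:
    """Filter by metadata (category, budget, stock), then rank by customer rating."""
    matches = [
        item for item in CATALOG
        if item.category == category and item.price_inr <= max_price and item.in_stock
    ]
    return sorted(matches, key=lambda item: item.avg_rating, reverse=True)[:k]


def format_context(items: list[Listing]) -> str:
    """Render the retrieved listings as context lines for the LLM prompt."""
    return "\n".join(
        f"{item.name}: ₹{item.price_inr:,}, rated {item.avg_rating}/5, in stock" for item in items
    )


print(format_context(retrieve_listings("running shoes", 5000)))
```

With this sample data, the context passed to the model lists Tempo Go and then Stride Lite: Pace Pro is over budget and Dash Flex is out of stock.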

Here’s a quick comparison of traditional generative AI models versus RAG-enabled systems:

| Aspect | Traditional Generative AI Models | RAG in Generative AI |
| --- | --- | --- |
| Data Source | Static, pre-trained datasets | Dynamic, retrieves live data |
| Knowledge Updates | Requires retraining to update knowledge | Accesses latest data instantly |
| Context Awareness | Limited to training information | Enhanced by external contextual retrieval |
| Accuracy | May generate hallucinations or outdated info | Reduces errors with factual references |
| Scalability | Costly retraining for new data | Easily scalable with knowledge bases |
| Use Cases | Generalized Q&A, text generation | Adaptive systems like chatbots, search assistants, and product recommenders |

This comparison highlights why RAG in Generative AI is becoming critical for applications that demand real-time precision and context, especially in customer-facing domains like e-commerce.

Business Advantages of Adopting RAG-Driven Systems

Implementing RAG doesn’t just improve model performance; it redefines how businesses use AI for decision-making. Companies adopting RAG-enabled systems can expect:

- Personalized Interactions: Dynamic recommendations aligned with user preferences.

- Reduced Operational Overheads: No need for frequent retraining cycles.

- Faster Decision-Making: Real-time insights based on the latest data.

- Enhanced Customer Trust: Accurate, source-backed responses.

- Scalable Intelligence: Easily integrates new data sources as businesses grow.

By transforming static responses into dynamic ones, RAG helps businesses evolve from reactive to proactive, especially in data-driven sectors like e-commerce, healthcare, and finance.

Implementing RAG in Generative AI

Getting started with RAG in generative AI requires careful planning. Begin by selecting a retrieval layer to store and query your knowledge, such as a managed vector database like Pinecone or a similarity-search library like FAISS. Then integrate it with an LLM using frameworks such as LangChain or Hugging Face Transformers.
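Pinecone and FAISS each have their own APIs, which this sketch does not use. Instead, it shows the underlying idea in plain Python: split documents into overlapping chunks, turn each chunk into a unit-length vector, and return the chunks most similar to the query by cosine similarity. The bag-of-words vectors are a deliberately simple stand-in for a learned embedding model, and the chunk size, overlap, and sample text are arbitrary assumptions.

```python
import math
import re


def tokens(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str, vocab: dict[str, int]) -> list[float]:
    """Bag-of-words vector over a fixed vocabulary, scaled to unit length."""
    vec = [0.0] * len(vocab)
    for t in tokens(text):
        if t in vocab:
            vec[vocab[t]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def top_k(
    query: str, index: list[tuple[str, list[float]]], vocab: dict[str, int], k: int = 2
) -> list[str]:
    """Rank chunks by cosine similarity (a dot product, since all vectors have unit length)."""
    q = embed(query, vocab)
    ranked = sorted(index, key=lambda item: sum(a * b for a, b in zip(q, item[1])), reverse=True)
    return [text for text, _ in ranked[:k]]


# Hypothetical source document.
document = (
    "Orders ship from our Pune warehouse within two days. "
    "Express delivery is available in major cities for an extra fee. "
    "Refunds are issued to the original payment method within seven days."
)
chunks = chunk(document)
vocab = {t: i for i, t in enumerate(sorted({t for c in chunks for t in tokens(c)}))}
index = [(c, embed(c, vocab)) for c in chunks]  # the "indexing" step
print(top_k("Is express delivery available?", index, vocab))
```

The overlap between chunks is why the second result still carries the “available in” phrase: a sentence split across a chunk boundary remains partly visible in both neighbors.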

Steps to Implement:

- Index Your Data: Convert documents into embeddings for efficient retrieval.

- Fine-Tune Queries: Optimize retrieval algorithms to match user intent.

- Test and Iterate: Monitor performance metrics like relevance and response time to refine the system.
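For the test-and-iterate step, a small labeled set of queries with known relevant documents is enough to start tracking relevance and response time. The sketch below computes hit rate at k and mean reciprocal rank (MRR), two common retrieval metrics, plus average latency. `fake_retriever` and the document IDs are hypothetical placeholders for your own retriever and data.

```python
import time
from collections.abc import Callable


def evaluate(
    retriever: Callable[[str], list[str]],
    labeled: list[tuple[str, str]],
    k: int = 3,
) -> dict[str, float]:
    """Return hit rate@k, mean reciprocal rank, and average latency over labeled queries."""
    if not labeled:
        raise ValueError("need at least one labeled query")
    hits, rr_sum, elapsed = 0, 0.0, 0.0
    for query, relevant_id in labeled:
        start = time.perf_counter()
        results = retriever(query)[:k]  # ranked document IDs, best first
        elapsed += time.perf_counter() - start
        if relevant_id in results:
            hits += 1
            rr_sum += 1.0 / (results.index(relevant_id) + 1)  # rank 1 -> 1.0, rank 2 -> 0.5
    n = len(labeled)
    return {f"hit_rate@{k}": hits / n, "mrr": rr_sum / n, "avg_latency_ms": 1000 * elapsed / n}


# Hypothetical retriever output: document IDs in ranked order for each query.
FAKE_RESULTS = {
    "return policy": ["doc_returns", "doc_shipping"],
    "shipping time": ["doc_faq", "doc_shipping"],
    "gift cards": ["doc_payments"],
}


def fake_retriever(query: str) -> list[str]:
    return FAKE_RESULTS.get(query, [])


labeled = [
    ("return policy", "doc_returns"),
    ("shipping time", "doc_shipping"),
    ("gift cards", "doc_giftcards"),
]
metrics = evaluate(fake_retriever, labeled)
print(metrics)
```

Here the retriever ranks the right document first for one query, second for another, and misses the third, giving a hit rate of 2/3 and an MRR of 0.5. Re-running the same labeled set after each change to chunking or query handling shows whether the change actually helped.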

Open-source libraries lower the barrier to entry, allowing businesses to build adaptive AI even without deep technical expertise.

Summary:

As organizations shift from static data to dynamic intelligence, RAG in Generative AI emerges as a critical enabler of accuracy, adaptability, and trust. By retrieving live, contextual data before generating responses, RAG transforms generative models from static text producers into adaptive reasoning systems.

The future of AI isn’t just about generating; it’s about understanding and evolving in real time. Businesses that embrace this shift will define the next wave of intelligent innovation.

Want to understand how AI-driven adaptability can shape your digital strategy? Connect with Priorise to explore frameworks, insights, and technologies like RAG in Generative AI that redefine the way organizations operate and innovate.